My current project is building a corporate simulation to serve as a multi-turn RL environment for LLMs. The goal is to simulate market dynamics with semi-optimal competition. The environment can then be used to generate realistic tasks in finance, data analysis, and general corporate strategy that require multi-turn interaction and tool calls that incur costs.

More to come!

Research

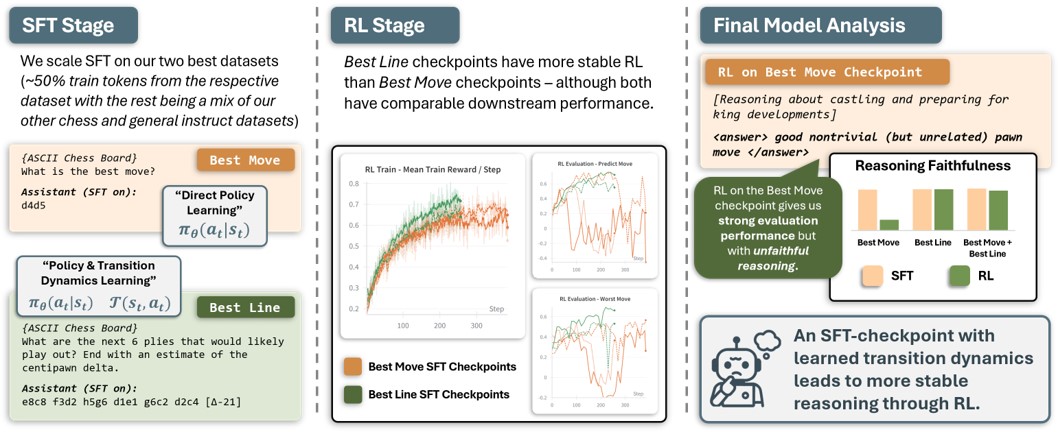

Reasoning Through Chess: How Reasoning Evolves from Data Through Fine-Tuning and Reinforcement Learning

NeurIPS 2025 FoRLM Workshop (Oral)

Lead author. Outperformed state-of-the-art open-source reasoning models in chess through SFT and RL on a 7B-parameter language model. The work studies how fine-tuning influences post-RL reasoning, both quantitatively and qualitatively, using custom, theoretically inspired datasets.

Under review for ICML 2026.

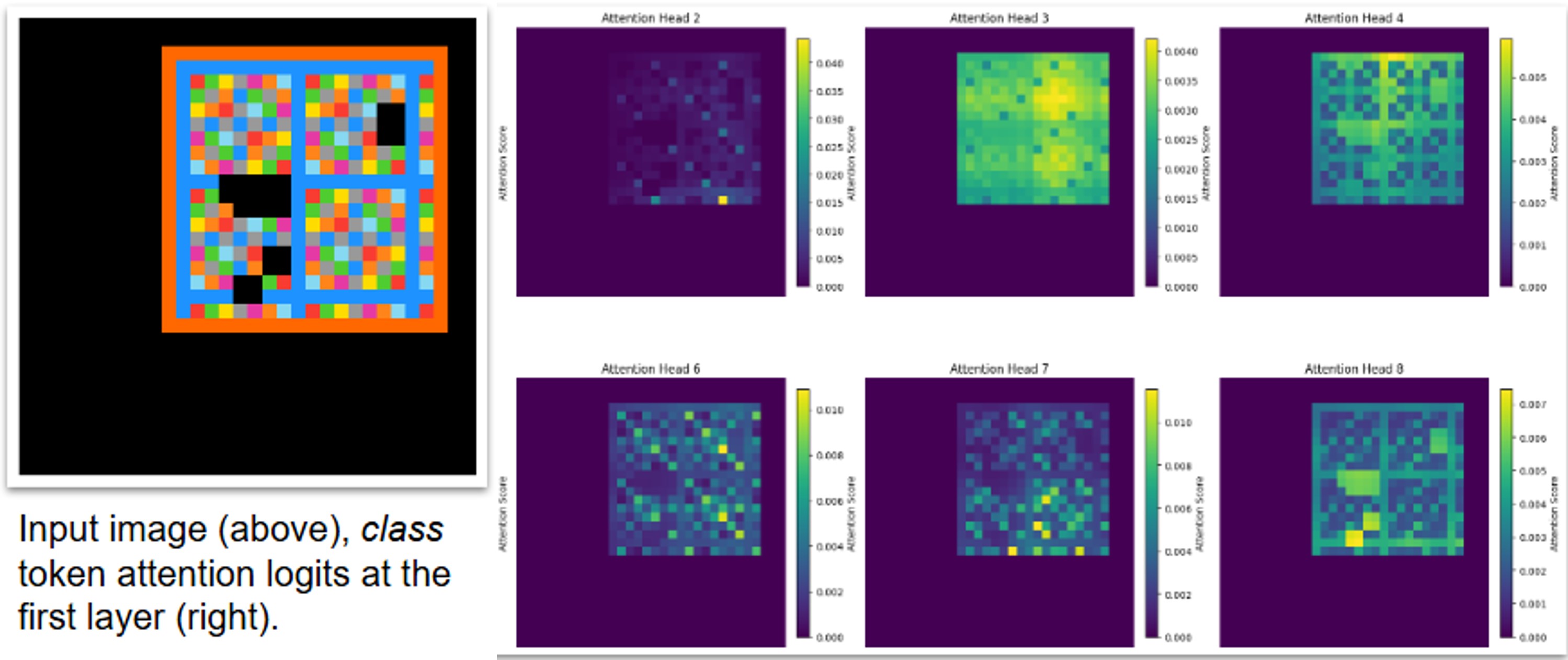

A* Neural Guided Search on ARC-AGI

Trained an image embedding model from scratch (a modified iBOT) on data from a synthetic generation pipeline, then combined it with a probabilistic context-free grammar (PCFG) in a neurosymbolic programming approach for ARC-AGI.

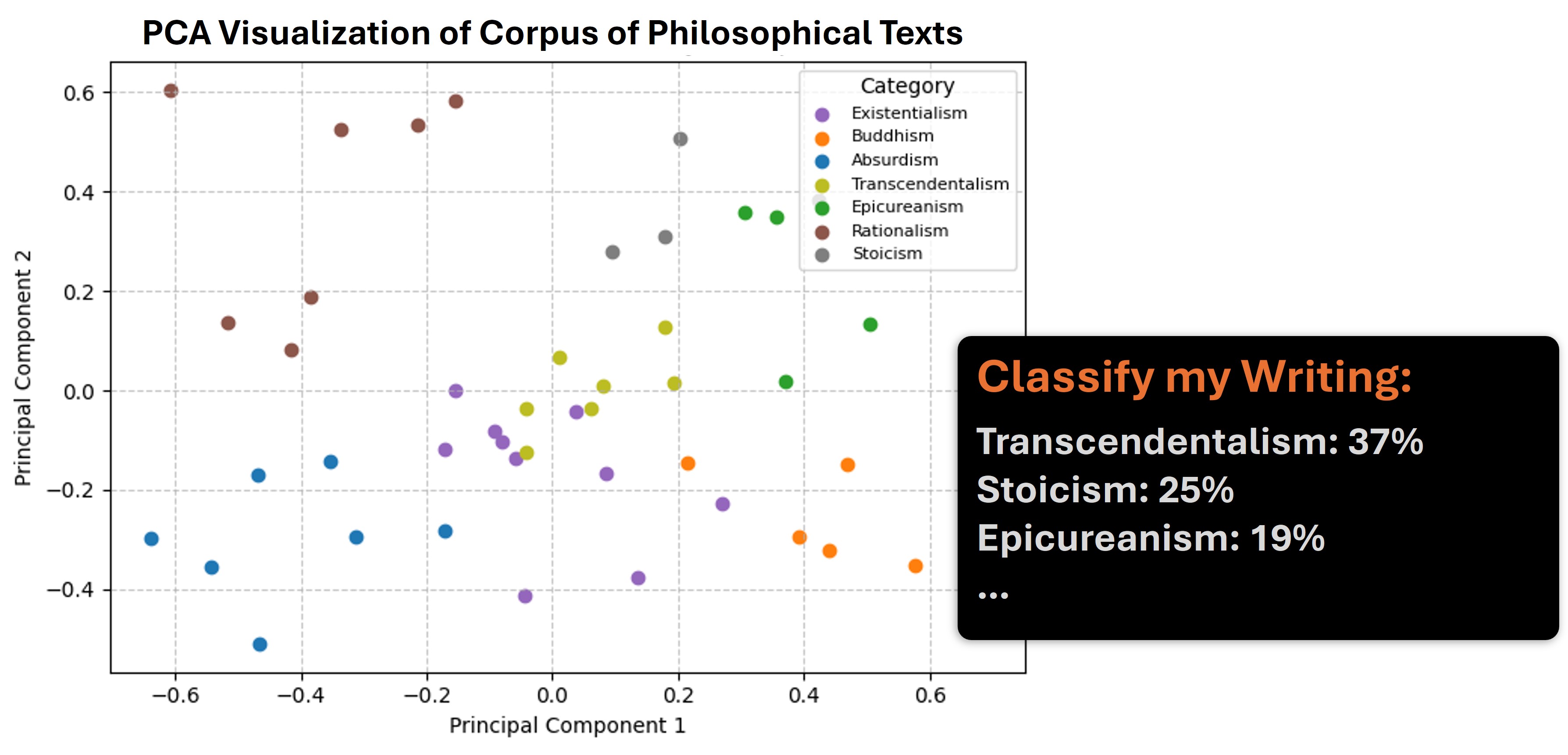

Philosophize That

Created a corpus of philosophical texts, using various techniques to isolate signal from correlated noise, and trained a model on document-level embeddings to classify the philosophical basis of my own journal entries.